AI is giving scammers a dangerous new edge—especially in grandparent hoaxes


It may start with a phone call in the middle of the night.

A familiar voice pleads for help—maybe your grandchild, maybe your child.

They’re in trouble, they say. They need money. Fast. But what if that voice isn’t theirs at all?

A new twist on a cruel scam is gaining ground, and it’s powered by artificial intelligence.

Criminals are now using AI voice-cloning tools to mimic the voices of loved ones, making grandparent scams harder to detect—and more emotionally devastating.

Scammers may need as little as one minute of audio, such as a short clip posted on social media, to recreate a convincing version of a family member’s voice.

And for seniors, that could mean a terrifying phone call that sounds exactly like someone they love.

Steve Weisman, a professor at Bentley University and founder of scamicide.com, says these AI-driven scams are becoming more common—and more sophisticated.

“You get the call, it's in the middle of the night, it's either your child, your grandchild – and the voice is that of your child or grandchild,” he explained. “They’re able to make it sound exactly like that person.”

In one recent case, 25 people were arrested in Canada for running a grandparent scam operation targeting Americans.

Over just three years, the group allegedly stole $21 million. And that’s just one operation.

Using spoofing technology, scammers can make the caller ID display a family member’s name or a local number, which makes the call look even more legitimate.

In some cases, scammers pose as lawyers or police officers.

In others, they go one step further, sending couriers known as “money mules” to the victim’s front door to collect cash in person.

These tactics can catch even the most cautious off guard.

And although anyone can fall for a scam, older adults are often singled out because scammers believe they’re more likely to answer unknown calls, less familiar with technology, and eager to help family members in trouble.

But knowledge is power. And experts say there are steps every family can take to fight back.

If you get a call from a family member in distress, don’t panic. Hang up and call them back using a number you know is real.

Consider setting up a family password—a word or phrase only your inner circle knows.

It can be a simple way to confirm identity during an emergency.

Explore more on AI:

Have you or someone you know received a strange or unsettling phone call like this? Do you have advice on staying alert—or questions about how AI is shaping new scams? Share your story in the comments. Together, we can stay one step ahead.

A familiar voice pleads for help—maybe your grandchild, maybe your child.

They’re in trouble, they say. They need money. Fast. But what if that voice isn’t theirs at all?

A new twist on a cruel scam is gaining ground, and it’s powered by artificial intelligence.

Criminals are now using AI voice-cloning tools to mimic the voices of loved ones, making grandparent scams harder to detect—and more emotionally devastating.

Scammers need just one minute of audio—something as simple as a short clip from social media—to recreate a convincing version of a family member’s voice.

And for seniors, that could mean a terrifying phone call that sounds exactly like someone they love.

Steve Weisman, a professor at Bentley University and founder of scamicide.com, says these AI-driven scams are becoming more common—and more sophisticated.

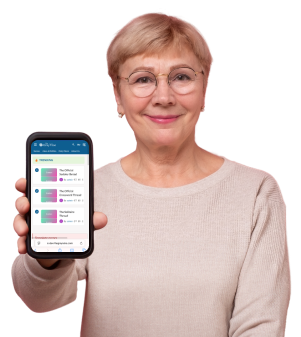

Artificial intelligence making grandparent scams even more cunning. Image source: Growtika / Unsplash

“You get the call, it's in the middle of the night, it's either your child, you're grandchild – and the voice is that of your child or grandchild.”

He explained. “They’re able to make it sound exactly like that person.”

In one recent case, 25 people were arrested in Canada for running a grandparent scam operation targeting Americans.

In just three years, they stole $21 million. And that’s just one group.

Using spoofing technology, scammers make the caller ID match a family member’s name or a local number, which makes the call look even more real.

In some cases, scammers pose as a lawyer or police officer.

In others, they go one step further—sending “money mules” to the victim’s front door to collect cash in person.

These tactics can catch even the most cautious off guard.

And although anyone can fall for a scam, older adults are often singled out because scammers believe they’re more likely to answer unknown calls, less familiar with technology, and eager to help their family.

But knowledge is power. And experts say there are steps every family can take to fight back.

If you get a call from a family member in distress, don’t panic. Hang up and call them back using a number you know is real.

Consider setting up a family password—a word or phrase only your inner circle knows.

It can be a simple way to confirm identity during an emergency.

Explore more on AI:

- Robot goes haywire—raising new questions about AI safety and control

- When AI goes too far: Sexualized content mimicking Down syndrome sparks outrage

- AI clones daughter's voice in chilling scam – Could your family be next?

Key Takeaways

- Criminals are now using AI voice cloning to impersonate family members in grandparent scams, often requiring just one minute of audio from social media.

- A recent case in Canada saw 25 people arrested for stealing $21 million from American seniors using these tactics.

- Scammers use spoofing to fake caller ID numbers and may even send money mules to victims’ homes to collect cash directly.

- Experts recommend setting up a family password, verifying calls independently, limiting online audio sharing, and reporting suspicious calls to the FTC.