Is the future here? Scientists unveil device that lets you speak without talking

Imagine a world where the barriers of communication are broken down, not by learning new languages, but by technology that can translate your very thoughts into speech.

For individuals who have lost the ability to speak due to paralysis or other conditions, this isn't just a daydream—it's a beacon of hope on the horizon.

Here at The GrayVine, we're excited to share a groundbreaking development that's turning the stuff of science fiction into reality.

A team of visionary engineers from the University of California has pioneered a brain-computer interface (BCI) system that's nothing short of revolutionary.

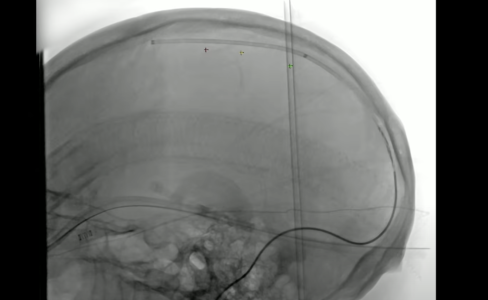

The system uses electrodes placed on the surface of the brain to monitor neural activity and detect the signals associated with speech.

These brainwaves are then analyzed and translated into speech by a computer system, with artificial intelligence vocalizing the interpreted thoughts.

According to the researchers, this breakthrough could give individuals with paralysis a new way to communicate by turning motor cortex signals—associated with speech—into audible language.

Even in people who have lost the physical ability to speak, the motor cortex continues to produce the neural signals for speech.

To tap into those signals, the researchers used sophisticated AI to process the data from the motor cortex and convert it into sound within about one second, enabling near-real-time speech.

A Glimpse into Ann's Journey

The BCI was tested on a woman named Ann, who has severe paralysis and is unable to speak. She had been part of an earlier trial with the same research group, though their earlier version of the system had an eight-second delay.

Kaylo Littlejohn, a Ph.D. candidate in UC Berkeley’s Department of Electrical Engineering and a co-leader of the study, said, “We wanted to see if we could generalize to the unseen words and really decode Ann’s patterns of speaking.”

“We found that our model does this well, which shows that it is indeed learning the building blocks of sound or voice,” the researchers noted, as their system successfully captured and replicated patterns tied to individual speech elements.

Ann, who became paralyzed after a brainstem stroke in 2005, shared that the brainwave-to-speech device gave her a renewed sense of agency.

The technology, she said, allowed her to feel “more in control of her communication” and immediately “more connected to her body.”

Brainwave decoding technology is still in its early stages, and past studies have shown limited capabilities, often restricted to identifying only a few individual words rather than complete phrases or sentences.

But researchers at the University of California believe their latest proof-of-concept, recently published in Nature Neuroscience, signals a promising leap forward. They’re optimistic it will allow them to “make advances at every level.”

The Path to Seamless Speech Synthesis

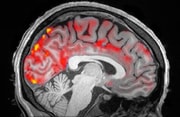

Several brain regions are involved in speech, especially the motor cortex, which creates distinctive patterns—or “fingerprints”—for each word.

Specific signals are generated when someone prepares to say a word like “hello” or “goodbye,” involving coordinated activity of the lips, tongue, and vocal cords, and even breath control.

To train their system, researchers developed a “naturalistic speech synthesizer” that relied on brainwave data collected from Ann as she attempted to speak simple phrases like “Hey, how are you?”

Though Ann couldn’t physically talk, her brain continued to send speech signals, which the electrodes detected and transmitted. These signals were divided into small segments representing portions of each sentence.
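For readers curious about what "dividing signals into small segments" can look like in practice, here is a minimal Python sketch. It is an illustration only, not the researchers' code: the function name `segment_signal`, the window and hop sizes, and the sample values are all assumptions made up for this example.

```python
from typing import Iterator, List, Sequence


def segment_signal(samples: Sequence[float], window: int, hop: int) -> Iterator[List[float]]:
    """Yield consecutive windows of `window` samples, advancing by `hop` samples."""
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    # Stop once a full window no longer fits in the remaining samples.
    for start in range(0, len(samples) - window + 1, hop):
        yield list(samples[start:start + window])


# Hypothetical single-channel recording (made-up values, not real data).
recording = [0.1, 0.3, 0.2, 0.5, 0.4, 0.6, 0.2, 0.1, 0.0, 0.3]

# Windows of 4 samples that overlap by 2 samples each.
segments = list(segment_signal(recording, window=4, hop=2))
print(len(segments))  # 4
```

Working on short, overlapping segments like these is one common way a decoder can start producing output before a whole sentence has been recorded.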

This technology is still in its early stages, but the implications are staggering. Previous attempts at decoding brain waves into speech were limited to a handful of words.

Now, the team from California has made strides in creating full sentences, marking a significant leap forward in the field.

The team also used recordings of Ann’s voice from before her stroke. This allowed the AI to produce simulated speech that closely resembled her natural voice using a text-to-speech model.

During testing, Ann was shown prompts such as “Hello, how are you?” and mentally practiced saying them.

Even without producing audible speech, her brain activity mimicked real speaking, enabling the AI to pick up and interpret the motor cortex signals.

Over time, the AI began learning her unique speech patterns. Eventually, Ann could express words not included in her original training set, such as “Alpha” and “Bravo.”

The system even learned to recognize words that hadn’t been directly visualized, filling in missing pieces to form complete, coherent sentences.

Dr. Gopala Anumanchipalli, one of the study’s co-leaders and an electrical engineering professor at UC Berkeley, said, “We can see relative to that intent signal, within 1 second, we are getting the first sound out. And the device can continuously decode speech, so Ann can keep speaking without interruption.”

Kaylo Littlejohn, another co-lead and Ph.D. student at UC Berkeley, emphasized the importance of their real-time speech capability: “Previously, it was not known if intelligible speech could be streamed from the brain in real time.”

Brain-computer interface (BCI) technology is attracting increased attention from both scientists and industry leaders.

In 2023, Brown University’s BrainGate consortium tested a similar system on Pat Bennett, an ALS patient, by implanting sensors in her cerebral cortex.

Over 25 sessions, AI software learned to identify phonemes—basic units of speech like “sh” or “th”—based on her neural activity.

The system then fed the decoded brain signals into a language model, which transformed them into readable text on a screen.

When the vocabulary was limited to 50 words, the error rate was about 9 percent, but it rose to 23 percent when expanded to 125,000 words—covering nearly everything someone might want to say.
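To make those percentages concrete, the sketch below shows one standard way such a figure can be computed, assuming it is a word error rate: the number of word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the number of intended words. The function name `word_error_rate` and the example sentences are illustrative assumptions, not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one of five intended words is dropped by the decoder.
wer = word_error_rate("hello how are you today", "hello how you today")
print(f"{wer:.0%}")  # 20%
```

By this measure, a 23 percent error rate means roughly one word in four needs correcting.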

While the study didn’t specify how many words the model had fully learned by the end, researchers said it demonstrated that machine learning could recognize thousands of speech elements, marking a significant milestone.

Similar Development

In a parallel development, Elon Musk’s company Neuralink made headlines in January 2024 by implanting its first brain chip into 29-year-old Noland Arbaugh, the initial participant in its clinical trial.

In 2016, Arbaugh suffered a spinal cord injury in a diving accident that left him paralyzed from the shoulders down.

Years later, he was selected to take part in a groundbreaking clinical trial involving Neuralink’s brain-computer interface (BCI), a device that enables the brain to interact directly with external technologies like smartphones or computers.

The Neuralink implant, placed in Arbaugh’s brain, connects to over 1,000 electrodes embedded in his motor cortex.

These electrodes detect the electrical activity of neurons when they fire—such as when he thinks about moving his hand—and send those signals wirelessly to a digital application. This lets Arbaugh control devices using only his thoughts.

He describes using the Neuralink system as similar to training a computer cursor, saying it's like learning to move it “left or right based on cues,” and that the system gradually adapts to understand his intentions.

Five months into using the device, Arbaugh says it’s already made a significant difference in his life—especially when it comes to texting, where he can now “send messages in seconds.”

He makes use of a virtual keyboard and a personalized dictation feature, and even enjoys games like chess and Mario Kart using the same mind-controlled cursor system.

Source: UC San Francisco (UCSF) / YouTube.

Meanwhile, the University of California team behind a separate BCI speech project sees this moment as a major leap forward in the pursuit of developing natural, fluent communication through brain interfaces.

Littlejohn commented, “That’s ongoing work, to try to see how well we can actually decode these paralinguistic features from brain activity,” referring to emotional tone and inflection in speech.

“This is a longstanding problem even in classical audio synthesis fields and would bridge the gap to full and complete naturalism,” he added.

Have you or someone you know been affected by a loss of speech? What do you think about the potential of this technology to change lives? Share your thoughts and stories in the comments below!

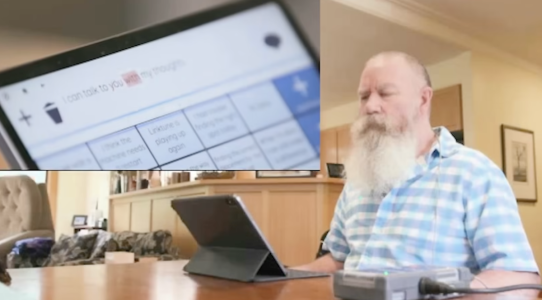

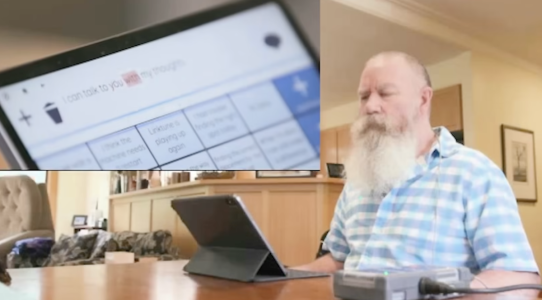

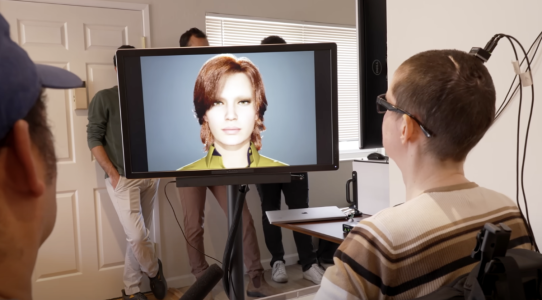

A breakthrough brain-computer interface (BCI) has been developed that can read brainwaves. Image source: CNET / YouTube.

It can also convert thoughts into speech, which could aid people with severe paralysis in communicating. Image source: CNET / YouTube.

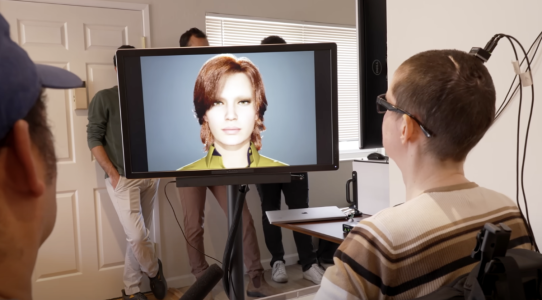

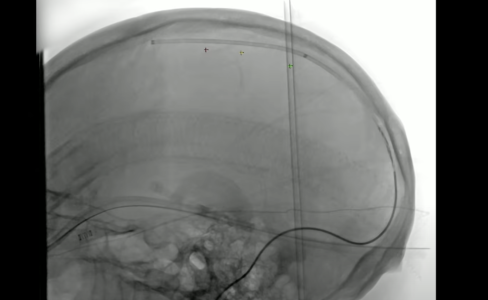

The BCI uses electrodes placed on the surface of the brain to capture activity in the motor cortex, which is decoded by AI to produce audible speech using the person's pre-paralysis voice. Image source: UC San Francisco (UCSF) / YouTube.

BCI is helping more and more people communicate with their ‘voice’ again. Image source: UC San Francisco (UCSF) / YouTube.

The technology showed significant improvement over previous attempts, enabling near-real-time speech streaming with a delay of about one second. Image source: ABC News / YouTube.

The BCI technology has potential for further advancements and is part of a growing field of research. Image source: ABC News / YouTube.

Key Takeaways

- A breakthrough brain-computer interface (BCI) has been developed that can read brainwaves and convert thoughts into speech, which could aid people with severe paralysis in communicating.

- The BCI uses electrodes placed on the surface of the brain to capture activity in the motor cortex, which is decoded by AI to produce audible speech using the person's pre-paralysis voice.

- The technology showed significant improvement over previous attempts, cutting the delay from about eight seconds to about one second and enabling near-real-time speech streaming.

- The BCI technology has potential for further advancements and is part of a growing field of research looking to improve communication for people with severe speech or movement disorders.
