New voice scams are targeting you—here’s how to outsmart them before it’s too late!

By Veronica E.

In a world where technology evolves faster than we can keep up, scammers have found new, sophisticated ways to deceive us. Artificial intelligence (AI) has moved beyond being just a buzzword—it’s now a tool in the hands of fraudsters.

One of the most concerning developments is the rise of AI voice cloning technology, which enables scammers to impersonate people you know and trust, making it more difficult than ever to spot the fraud.

Imagine receiving a call in a loved one’s voice, filled with urgency, asking for money. Your heart races, and your instinct to help takes over.

This is the reality that AI voice scams create. By cloning voices, scammers can trick you into acting quickly and without question, often targeting the most vulnerable.

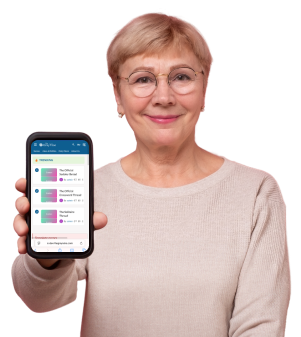

Protect yourself from AI voice scams: stay informed and safeguard your family. Image Source: Pexels / Anna Tarazevich.

In 2023, the FBI reported that senior citizens lost around $3.4 billion to financial crimes, and the agency has warned that AI is making these scams more believable.

Because AI can eliminate the human errors that once gave scammers away and produce remarkably convincing content, these scams are becoming harder to detect.

The Grandparent Scam: A Classic Con with a Modern Twist

One of the most heart-wrenching scams is the ‘grandparent scam,’ where fraudsters pose as a grandchild in need of cash.

The addition of AI voice cloning has made this scam more believable and dangerous than ever. What was once a cruel trick has now evolved into an even more sophisticated and convincing attack.

The Psychology Behind the Scam

Chuck Herrin, a chief information security officer, explains that these scams are designed to exploit our emotions, triggering a fear-based response.

When we’re afraid, our judgment can falter, leading us to make hasty decisions—like sending money to a scammer.

Outsmarting the Scammers: Your Defense Strategy

The good news is there are ways to protect yourself and your loved ones from falling victim to these scams.

Cybersecurity experts and law enforcement officials recommend a simple yet effective tool: the family safe word.

Creating a Family Safe Word

A family safe word is a unique, pre-agreed word or phrase known only to you and your close family members.

It should be something personal, not easily guessed or found online. Avoid using common identifiers like street names or schools. Instead, choose a phrase that’s meaningful to your family but obscure enough to be safe.

The Power of Verification

Along with a safe word, it’s crucial to establish a verification process. If you ever receive a call asking for financial assistance, insist on the caller providing the safe word before taking any action.

This simple step could be the difference between protecting your assets and falling victim to a scam.

Eva Velasquez, CEO of the Identity Theft Resource Center, warns that even safe words require proper handling.

Family members must understand that they should never volunteer the safe word during a call, since a scammer who hears it can repeat it back. The caller should be the one to say it first.

Join the Fight Against AI Voice Scams

We encourage you to:

- Talk to your family about AI voice scams, stressing the need for skepticism and verification.

- Choose a family safe word and create a clear process for its use.

- Regularly update your security practices to stay one step ahead of scammers.

By staying vigilant and adopting these strategies, you can better protect yourself from the increasing threat of AI voice scams. Knowledge and preparation are your best defenses.

Keep your family safe, and remember—scammers rely on urgency and emotional manipulation, but you can outsmart them by staying informed and verifying any request that seems suspicious.

Key Takeaways

- AI-enabled voice cloning tools are increasingly being used in scams, allowing criminals to mimic the voices of victims’ loved ones to defraud them.

- The FBI has warned about the rise of these scams, emphasizing that older individuals are more at risk, particularly as these scams become more believable due to AI technology.

- Experts recommend adopting a unique family 'safe word' as a first line of defense against such scams, to verify the identity of a caller claiming to be a family member in distress.

- It’s important for family members to understand how to use the safe word correctly and never to volunteer it during a call, as doing so could compromise their security.

Have you or someone you know encountered an AI voice scam? Do you have other tips for staying safe? Share your experiences and advice in the comments below. Your insights could help others avoid falling victim to these scams.